Introducing FrAMBI: A new framework for auditory modelling

Understanding how humans perceive sound is a complex challenge, and scientists have long relied on computational models to describe the perceptual mechanisms behind listeners’ behaviour and their neural underpinnings.

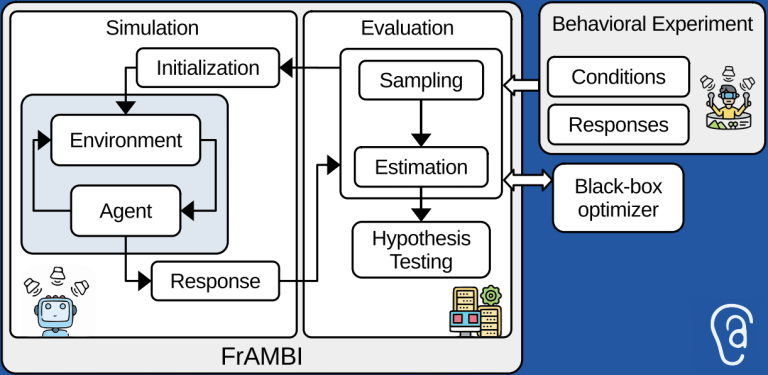

To address the need for standardised and reproducible methods, a new SONICOM publication introduces FrAMBI, a MATLAB/Octave framework that applies Bayesian inference to model auditory perception and behaviour.

FrAMBI provides researchers with a statistical framework for implementing and evaluating auditory models, promoting consistency across studies. By structuring models around the perception-action cycle, FrAMBI simulates how listeners dynamically engage with sound sources, enabling hypothesis testing and statistical analysis of model predictions.
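To give a flavour of what Bayesian inference means in a localisation setting, here is a minimal, generic sketch in MATLAB/Octave. It is not FrAMBI's interface: every variable name, the sine-shaped cue, and the noise level are assumptions made purely for illustration. It covers only a single perception-to-response step, not the full perception-action cycle.

```matlab
% Illustrative sketch only: generic Bayesian inference on a grid.
% None of these names or values come from FrAMBI or AMT.

% Candidate source azimuths (degrees) considered by a hypothetical listener
azimuths = -90:5:90;

% Uniform prior belief over the candidate directions
prior = ones(size(azimuths)) / numel(azimuths);

% Assumed toy feature: a cue that varies with the sine of the azimuth
cue_template = sind(azimuths);

% Assumed sensory noise (standard deviation of the cue)
sigma = 0.1;

% Observation: cue of a source at 30 degrees plus a fixed perturbation
true_azimuth = 30;
observed_cue = sind(true_azimuth) + 0.02;

% Gaussian likelihood of the observation for each candidate direction
likelihood = exp(-0.5 * ((observed_cue - cue_template) / sigma) .^ 2);

% Bayes' rule: posterior is proportional to likelihood times prior
posterior = likelihood .* prior;
posterior = posterior / sum(posterior);

% Response: maximum a posteriori (MAP) estimate of the direction
[~, idx] = max(posterior);
estimate = azimuths(idx);

fprintf('Estimated azimuth: %d degrees\n', estimate);
```

In a sketch like this, changing the prior, the noise level, or the response rule yields different model variants whose predictions could then be compared against listener data.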

“We created FrAMBI to provide the auditory community with a common statistical methodology to generate empirical evidence through auditory models,” says Dr Roberto Barumerli, the publication’s lead author based at the Austrian Academy of Sciences and the University of Verona. “We hope that the toolbox will foster reproducible research in psychoacoustics and auditory neuroscience.”

The new publication presents FrAMBI’s theoretical foundation and demonstrates its capabilities through examples of increasing complexity in sound source localisation tasks:

- Implementation of a basic static scenario;

- Evaluation of dynamic scenarios with a moving sound source;

- Comparison of model variants testing neural mechanisms for vertical localisation.

FrAMBI is integrated into the widely used and SONICOM-supported auditory modelling toolbox (AMT), allowing it to be maintained and expanded over the long term.

To learn more about FrAMBI and its possible applications, read the full publication: https://link.springer.com/article/10.1007/s12021-024-09702-5

To experience FrAMBI firsthand, download AMT 1.6, load it in MATLAB, and run `exp_barumerli2024('basic')` to explore its capabilities.
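In practice, those steps might look like the short sketch below. It assumes AMT 1.6 has already been downloaded and unpacked; the folder path is a placeholder to replace with your own location. `amt_start` is AMT's start-up function, and the experiment call is the one given above.

```matlab
% Placeholder path: point this at the folder where AMT 1.6 was unpacked
addpath('path/to/amtoolbox');

% Initialise the Auditory Modelling Toolbox
amt_start;

% Run the basic FrAMBI example mentioned above
exp_barumerli2024('basic');
```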